WetGeek Despite having created a tutorial here for creating and using VMs, I still can't convince some of my friends to give them a fair try.

Although I use Gnome Boxes VMs frequently for "look-see" preliminary evaluation (as you know), I will not use VMs for production or serious evaluation. A bare metal installation is sufficiently different from a VM environment to make VM use problematic for those use cases, in my opinion.

WetGeek It would save SO much drama and misery for them, not to mention time spent fighting with dual-boot schemes and re-installing the systems that have crashed.

I have run numerous dual-drive, dual-boot, dual-partition instances over the last 3-4 years without a single problem or system crash. I've dual-booted Windows 10/11 with various Linux distros, and have similarly dual-booted different Linux distros without problems. So have others on this forum.

Right now, for example, I have Solus and UB 22.04 Beta dual booting on my Solus computer and Windows 10 and Zorin 16 dual booting on my "geezer support" computer. Before I installed UB on my Solus computer two weeks ago, I was running Solus and Windows 11 Beta on that computer. When I cut over, I removed the Windows 11 drive, disconnected the Solus drive, put in a clean drive, and installed UB on the clean drive. It really isn't rocket science.

The secret to making this work is to ensure that only the target drive is operative during installation, so that the OS being installed does not recognize the non-target drive(s) and cannot interfere with them in any way. Some people achieve that by setting/unsetting/resetting flags, others by physically disconnecting non-target drives during installation (the method I use, as do several other members of this forum).
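For anyone who wants to double-check this from a live session before starting the installer, here is a minimal Python sketch. It assumes a Linux live environment where `lsblk --json -o NAME,TYPE` is available; the function names and the device names (`sda`, `sdb`, `nvme0n1`) are only illustrative, not anything specific to my setup.

```python
import json
import subprocess


def read_lsblk():
    """Run lsblk and return its JSON output as a dict (Linux only)."""
    result = subprocess.run(
        ["lsblk", "--json", "-o", "NAME,TYPE"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def unexpected_disks(lsblk_data, allowed):
    """Return whole disks that are visible but not in the allowed set.

    `allowed` should hold the target drive plus the installer USB stick.
    """
    disks = [
        dev["name"]
        for dev in lsblk_data.get("blockdevices", [])
        if dev.get("type") == "disk"
    ]
    return sorted(set(disks) - set(allowed))


# Hypothetical lsblk output: target drive, installer stick, and one
# drive that should have been disconnected.
SAMPLE = {
    "blockdevices": [
        {"name": "loop0", "type": "loop"},
        {"name": "sda", "type": "disk"},
        {"name": "sdb", "type": "disk"},
        {"name": "nvme0n1", "type": "disk"},
    ]
}

# sda = target drive, sdb = installer USB (assumed names)
EXTRA = unexpected_disks(SAMPLE, allowed={"sda", "sdb"})
```

On a real machine you would call `unexpected_disks(read_lsblk(), allowed={...})` with your own device names; an empty list means the installer can only see the drives you intended.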

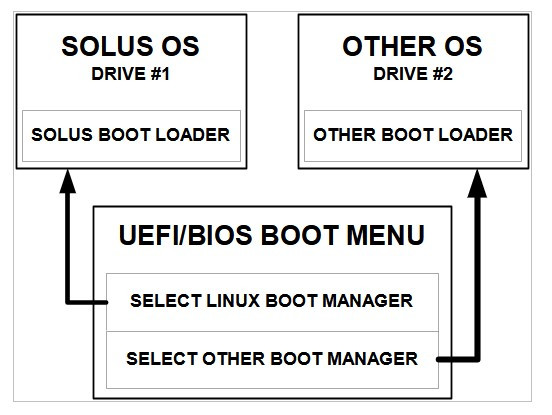

The basic principle is to set up an independent, segregated EFI partition on each drive, so that the bootloader for each OS never "sees" the other during the boot process. Boot selection is made at the UEFI/BIOS Boot Menu level.
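A quick way to confirm that each drive really carries its own EFI partition is to look at the partition type GUIDs. The sketch below is only an illustration under a few assumptions: the drives use GPT, the data comes from `lsblk --json -o NAME,TYPE,PARTTYPE`, and the function name and sample device names are made up for the example. The GUID is the standard EFI System Partition type from the UEFI specification.

```python
# Standard GPT partition type GUID for an EFI System Partition (ESP).
ESP_GUID = "c12a7328-f81f-11d2-ba4b-00a0c93ec93b"


def disks_without_esp(lsblk_data):
    """Return the names of whole disks that have no EFI System Partition."""
    missing = []
    for dev in lsblk_data.get("blockdevices", []):
        if dev.get("type") != "disk":
            continue  # skip loop devices, optical drives, etc.
        partitions = dev.get("children") or []
        has_esp = any(
            (part.get("parttype") or "").lower() == ESP_GUID
            for part in partitions
        )
        if not has_esp:
            missing.append(dev["name"])
    return missing


# Hypothetical two-drive layout: sda has its own ESP, sdb does not.
SAMPLE = {
    "blockdevices": [
        {"name": "sda", "type": "disk", "parttype": None, "children": [
            {"name": "sda1", "type": "part",
             "parttype": "c12a7328-f81f-11d2-ba4b-00a0c93ec93b"},
            {"name": "sda2", "type": "part",
             "parttype": "0fc63daf-8483-4772-8e79-3d69d8477de4"},
        ]},
        {"name": "sdb", "type": "disk", "parttype": None, "children": [
            {"name": "sdb1", "type": "part",
             "parttype": "0fc63daf-8483-4772-8e79-3d69d8477de4"},
        ]},
    ]
}

MISSING = disks_without_esp(SAMPLE)
```

In this sample, `sdb` is reported because it has no ESP of its own, which would mean its OS depends on the bootloader on the other drive. That is exactly the coupling the separate-EFI approach is meant to avoid.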

As you have, I've seen reports of "SO much drama and misery" over the years created by dual booting. In my opinion, most of that drama and misery comes from (1) setting up dual boot on a single drive, (2) setting up dual-boot but not separating EFI partitions carefully enough, (3) forgetting to comply with the basic principles of EFI separation, for example, by not resetting UEFI/BIOS Boot Menu sequence to the Windows Boot Manager during "Patch Tuesday" updates, (4) attempting to use Grub or another boot manager instead of the UEFI/BIOS Boot Menu as the bootloader launch point, and (5) trying to multi-boot numerous Linux distros in a dual-boot setup.

Dual-booting is not a casual undertaking. It requires skill and attention to detail during installation, as well as an understanding of how the dual-boot setup works and the discipline to apply that understanding in daily use. But even relative simpletons like me can do it if we are paying attention.

WetGeek And I have absolutely zero fear that one system will ever interfere with the other. They can't possibly do that. In fact, I've implemented the option that allows the host and the VM to share a clipboard, so it's easy to pass things back and forth. Only one HDD or SSD is needed for your main system and all of your VMs.

That's an advantage of VM use, of course, and I have no problem with the principle. VMs are the best method of installing OSes for preliminary evaluation, and I use them for that purpose. However, VMs don't work as well for production environments or serious evaluation, because hardware interactions are skewed. How can an OS's interaction with a wifi adapter be evaluated if the OS is not directly using the adapter?

You and I will almost certainly never agree fully on this issue.