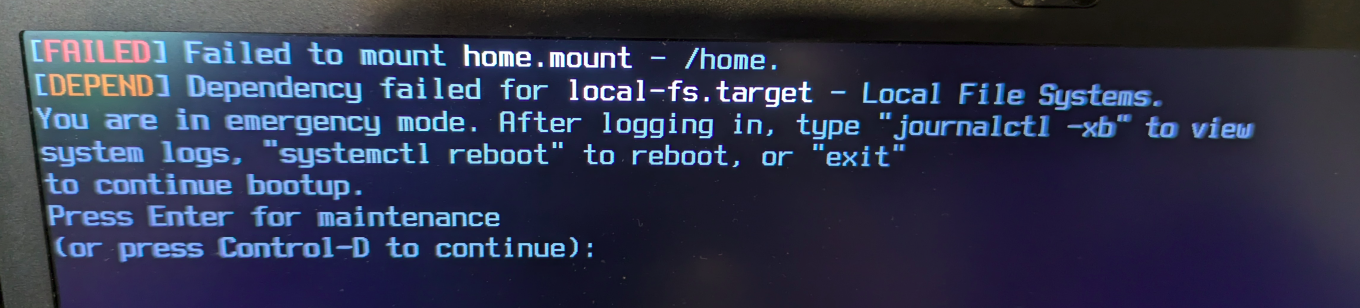

infinitymdm On my laptop there is additional weird behaviour occurring. Namely it is failing to mount the /home partition on startup around 50% of the time. If I just get it to reboot after the error it will boot just fine most times but sometimes it takes multiple attempts. Here is an image of the error:

And yes, to be clear, the other error still persists. This is how it appears on my laptop:

```
[✗] Updating clr-boot-manager failed

A copy of the command output follows:

[FATAL] cbm (../src/bootman/bootman.c:L562): FATAL: Cannot mount boot device /dev/nvme0n1p1 on /boot: No such device

[✗] Updating clr-boot-manager failed

A copy of the command output follows:

[FATAL] cbm (../src/bootman/bootman.c:L562): FATAL: Cannot mount boot device /dev/nvme0n1p1 on /boot: No such device

[✓] Running depmod on kernel 6.9.8-294.current success
```

Here is the output of `sudo journalctl -k | grep nvme` on my laptop (I will also reply with my desktop's output in a few minutes):

```
Jul 19 15:42:35 solus kernel: nvme nvme0: pci function 0000:3e:00.0
Jul 19 15:42:35 solus kernel: nvme nvme0: 8/0/0 default/read/poll queues
Jul 19 15:42:35 solus kernel: nvme0n1: p1 p2 p3 p4
Jul 19 15:42:37 solus kernel: EXT4-fs (nvme0n1p3): mounted filesystem 9aa71c0a-9d55-4f79-b370-a3f554f8eb80 r/w with ordered data mode. Quota mode: none.
Jul 19 15:42:38 matt-solus-t480s kernel: EXT4-fs (nvme0n1p3): re-mounted 9aa71c0a-9d55-4f79-b370-a3f554f8eb80 r/w. Quota mode: none.
Jul 19 15:42:38 matt-solus-t480s kernel: Adding 11718652k swap on /dev/nvme0n1p2. Priority:-2 extents:1 across:11718652k SS
Jul 19 15:42:40 matt-solus-t480s kernel: EXT4-fs (nvme0n1p4): mounted filesystem fa0ff32f-4b57-468f-981f-e79f6fed9aa7 r/w with ordered data mode. Quota mode: none.
```

What I find most bizarre is that the error is replicated almost exactly on both of my systems.